AI’s Energy Hunger: A Looming Crisis?

For the past few years, one of the biggest concerns about the AI boom has been its insatiable demand for energy. AI model training and inference are energy-intensive: large deployments draw gigawatts of power, stress the electrical grid, and push up energy prices. Google, OpenAI, and Microsoft have all faced scrutiny over whether their expanding AI operations will require entirely new energy infrastructure.

Goldman Sachs predicts that by 2030, AI data centers will consume 4.5% of the world’s electricity, up from 1% today. Major power companies, from Exelon ($EXC) to NextEra Energy ($NEE), are scaling up nuclear, renewables, and natural gas to meet this demand. But what if the narrative is shifting?

Enter DeepSeek, a Chinese AI lab that just demonstrated a new model reported to be 10x more energy-efficient than traditional AI models. If this trend continues, the assumption that AI will double or triple data center electricity demand may be flawed.

This development could have profound implications for the energy markets, AI infrastructure investments, and the long-term scalability of artificial intelligence.

DeepSeek’s Disruption: Smarter AI, Less Power

DeepSeek recently trained an AI model using only 2,000 GPUs, compared to the tens of thousands typically required by companies like OpenAI or Google DeepMind. Reports indicate that DeepSeek achieved a 90% reduction in power consumption while delivering performance close to state-of-the-art models.
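The "10x efficiency" and "90% reduction" figures describe the same claim in two ways. A minimal sketch of the conversion, assuming the same amount of work is done before and after (the function names are illustrative, not taken from any DeepSeek material):

```python
def reduction_from_efficiency(multiple: float) -> float:
    """Fraction of energy saved when the same work is done
    `multiple` times more efficiently (e.g. 10x -> 0.9)."""
    if multiple <= 0:
        raise ValueError("efficiency multiple must be positive")
    return 1.0 - 1.0 / multiple


def efficiency_from_reduction(reduction: float) -> float:
    """Efficiency multiple implied by a fractional energy reduction
    (e.g. 0.9 -> roughly 10x)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


if __name__ == "__main__":
    print(f"10x efficiency -> {reduction_from_efficiency(10):.0%} less energy")
    print(f"90% less energy -> {efficiency_from_reduction(0.9):.1f}x efficiency")
```

Note how quickly the returns diminish: going from 10x to 20x efficiency only moves the saving from 90% to 95%.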

How Did DeepSeek Reduce Energy Consumption?

Efficient Model Architectures – New breakthroughs in transformer models have allowed for smarter, more resource-efficient training.

Optimized Compute Hardware – Instead of relying on NVIDIA’s most advanced GPUs, DeepSeek reportedly used less expensive, less capable chips.

Improved Training Algorithms – By fine-tuning how models learn, DeepSeek reduced redundant computations, cutting energy use.

Smarter Inference Techniques – A major share of AI power consumption comes from inference, i.e., running the model in production. If DeepSeek’s inference techniques are also energy-efficient, this could shift the entire AI infrastructure landscape.

These factors suggest that AI does not need to be an energy black hole. The efficiency breakthroughs in training may extend to inference, significantly reducing long-term power requirements for AI data centers.
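To get a feel for the scale involved, here is a back-of-the-envelope sketch that applies an efficiency gain to the 4.5% projection cited earlier. Everything beyond that figure is an assumption: the `adoption` parameter (the fraction of AI workloads that actually benefit) is hypothetical, and the model ignores any extra usage that cheaper AI might trigger.

```python
def adjusted_share(projected_share_pct: float,
                   efficiency_multiple: float,
                   adoption: float) -> float:
    """Projected share of world electricity (in %) after an efficiency gain.

    projected_share_pct: baseline projection, e.g. 4.5 (% of world electricity)
    efficiency_multiple: e.g. 10 for a 10x efficiency gain
    adoption: hypothetical fraction (0..1) of workloads that get the gain
    """
    if efficiency_multiple <= 0:
        raise ValueError("efficiency multiple must be positive")
    if not 0.0 <= adoption <= 1.0:
        raise ValueError("adoption must be between 0 and 1")
    # Workloads without the gain keep their energy use;
    # workloads with it shrink by the efficiency multiple.
    remaining = (1.0 - adoption) + adoption / efficiency_multiple
    return projected_share_pct * remaining


if __name__ == "__main__":
    for adoption in (0.0, 0.25, 0.5, 1.0):
        share = adjusted_share(4.5, 10, adoption)
        print(f"adoption {adoption:.0%}: {share:.2f}% of world electricity")
```

Even under these simplified assumptions, the outcome depends heavily on how widely the efficiency gains spread, which is why the projection is better treated as a range than a single number.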

Implications for AI, Energy, and Investment

The assumption that AI will be an unstoppable force guzzling power has shaped massive investments in energy infrastructure. But DeepSeek’s breakthrough could redefine the economics of AI and its impact on power grids. Here’s what that means for key sectors:

1. AI Infrastructure: Winners and Losers

Winners: Companies focusing on energy-efficient AI chips will thrive. Watch for moves from NVIDIA ($NVDA), AMD ($AMD), and Tenstorrent in optimizing power-efficient AI hardware.

Losers: The idea that data center power demand will double or triple in the next five years may no longer hold. Companies betting on unchecked data center energy expansion, such as some hyperscale REITs, may need to rethink their strategies.

2. Energy Markets: Shift in Demand Growth

Nuclear & Renewables: The AI boom was supposed to be the greatest demand driver for nuclear in decades. While nuclear and renewables are still crucial, the urgency may decrease if AI models require significantly less power.

Data Center Cooling Companies: The demand for liquid cooling solutions, such as those from Trane ($TT) and Carrier ($CARR), may not explode as expected if AI energy consumption stabilizes.

3. The Geopolitical Angle: China vs. U.S. AI Dominance

DeepSeek’s efficiency breakthrough gives China an edge in the AI arms race. If Chinese models can match Western counterparts with 90% less energy, it challenges the dominance of NVIDIA and Western AI infrastructure.

U.S. chip sanctions may become less effective if China can do more with less. The AI race might shift toward who can build the most efficient models rather than who has the most powerful chips.

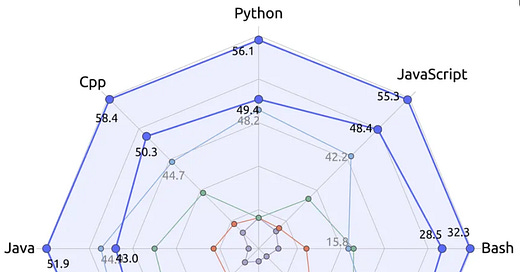

The AI Landscape in 1-3 Years: What’s Coming Next

The rise of DeepSeek and open-source AI is reshaping technology, economics, and geopolitics. While it lowers costs and broadens access, real control over AI remains concentrated in compute power, energy, and chip supply chains. Here’s what to expect in the next few years.

1. AI Will Be More Accessible, But Not Fully Decentralized

Open-source AI will lower barriers to entry, leading to an explosion of niche AI applications.

However, true decentralization is unlikely, as compute infrastructure remains concentrated in cloud giants and chipmakers.

Key Question: Will AI truly become decentralized, or are we just shifting control from Silicon Valley to new, but still centralized, players?

Winners: Startups leveraging AI at lower costs, but Big Tech still controls distribution.

2. AI Compute Becomes a New Strategic Battleground

NVIDIA will dominate the next-gen AI race with Blackwell GPUs, but AMD, Intel, Huawei, and DePIN (decentralized physical infrastructure network) projects will challenge its pricing power.

AI compute will be regulated like semiconductors, with nations restricting access to protect their own AI industries.

Key Question: Will AI computation remain centralized in cloud providers, or will distributed computing (blockchain, DePIN, edge AI) take off?

Winners: Companies optimizing AI for efficiency over raw power.

3. AI Costs Drop 90%+, Fueling Ubiquity

AI will be embedded in gaming, business tools, customer service, and creative industries.

Cost savings mean even small players can build AI-driven products that were previously too expensive.

Key Question: With an explosion of AI applications, how do we ensure quality control and ethical standards?

Winners: Companies integrating AI seamlessly into existing user experiences.

Final Outlook (2026): AI Is Ubiquitous, But Still Controlled

AI will be everywhere, but power will sit with those who control chips, energy, and distribution.

We’re entering an era where AI isn’t just a tech innovation—it’s an economic and geopolitical weapon.

The world is about to get smarter, faster, and more competitive—but true AI decentralization remains an open question.